Is Turnitin AI Detector Accurate? Real Cases & Data Insights

You’ve submitted an essay you wrote entirely on your own—no AI, no shortcuts. But then, Turnitin flags it as AI-generated. Now you're left wondering: How accurate is this detection system, really? You're not alone in asking that question.

In this article, we’ll break down what Turnitin’s AI detection tool is, how it works, and—most importantly—what factors might cause it to flag your writing. We'll also explore what real data and real experiences say about its reliability.

Let’s dive in and uncover what’s really behind Turnitin’s AI scores.

Is Turnitin’s AI Detector Accurate? – What the Official Data Shows

Turnitin officially introduced its AI writing detection technology in 2023 to identify content generated or paraphrased by AI tools like ChatGPT. Its goal is to support academic integrity while minimizing the risk of false accusations against students. To understand how accurate this detection system really is, we can look at the data and decisions Turnitin has publicly shared.

How Turnitin’s AI Detector Works

Turnitin’s AI writing indicator analyzes the text of a submission and scores each segment by how likely it is to be AI-generated. It compares statistical patterns in word use, sentence structure, and phrasing against the typical output of large language models (LLMs) like GPT-3 and GPT-4. Because AI-generated writing tends to follow more predictable word patterns than human writing, the system measures how closely each segment of the document resembles that pattern.

Once the tool detects content it considers AI-generated, it applies a secondary layer of detection to determine whether that content has been paraphrased using AI tools (e.g., QuillBot). This two-step process helps identify both direct AI writing and reworded AI content.
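The two-step flow described above can be sketched in a few lines of Python. This is only an illustration of the structure, not Turnitin’s implementation: the sentence-based segment splitter, the 0.5 threshold, and the `ai_score` and `paraphrase_score` callables are all hypothetical stand-ins for models whose details Turnitin has not published.

```python
from typing import Callable, Dict, List


def split_into_segments(text: str, sentences_per_segment: int = 3) -> List[str]:
    """Naively group consecutive sentences into segments (hypothetical splitter)."""
    sentences = [s.strip() for s in text.split(".") if s.strip()]
    return [
        ". ".join(sentences[i:i + sentences_per_segment]) + "."
        for i in range(0, len(sentences), sentences_per_segment)
    ]


def analyze(
    text: str,
    ai_score: Callable[[str], float],          # hypothetical stage-1 model
    paraphrase_score: Callable[[str], float],  # hypothetical stage-2 model
    threshold: float = 0.5,                    # assumed cutoff, not a published value
) -> List[Dict]:
    """Flag each segment as AI-like, then check only flagged segments for paraphrasing."""
    results = []
    for segment in split_into_segments(text):
        is_ai = ai_score(segment) >= threshold
        # Stage 2 runs only when stage 1 has already flagged the segment.
        is_paraphrased = is_ai and paraphrase_score(segment) >= threshold
        results.append({"segment": segment, "ai": is_ai, "paraphrased": is_paraphrased})
    return results


def ai_percentage(results: List[Dict]) -> float:
    """Share of words that sit inside AI-flagged segments, as a percentage."""
    total = sum(len(r["segment"].split()) for r in results)
    flagged = sum(len(r["segment"].split()) for r in results if r["ai"])
    return 100.0 * flagged / total if total else 0.0
```

Because the paraphrase scorer is never consulted for segments the first stage did not flag, the second stage can relabel flagged text but cannot add new flags of its own.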

What Turnitin Says About Accuracy

According to Turnitin’s own data, the system is built to maintain a false positive rate of under 1% for documents with more than 20% AI writing. In other words, out of every 100 human-written papers, fewer than one should be wrongly flagged as AI-generated. To back this claim, Turnitin tested its system on 800,000 academic papers written before ChatGPT existed, using them as a baseline for genuine human writing.

However, to keep this false positive rate low, Turnitin accepts a tradeoff: it may miss around 15% of AI-generated content. For instance, if Turnitin indicates that 50% of a document is AI-written, the actual amount could be as high as 65%. This reflects the system’s cautious approach: it errs on the side of not flagging genuine human writing.

(source: Turnitin)
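The tradeoff between false positives and missed AI content can be illustrated with a toy example. The scores below are invented purely for illustration and are not Turnitin data; the point is only that raising the flagging threshold lowers the false positive rate while increasing the share of AI text that slips through.

```python
from typing import Tuple

# Invented detector scores (0 = looks human, 1 = looks AI), for illustration only.
HUMAN_SCORES = [0.05, 0.10, 0.20, 0.30, 0.35, 0.40, 0.55, 0.62]
AI_SCORES = [0.45, 0.58, 0.70, 0.80, 0.85, 0.90, 0.95, 0.99]


def error_rates(threshold: float) -> Tuple[float, float]:
    """Return (false_positive_rate, miss_rate) at a given flagging threshold."""
    false_positives = sum(score >= threshold for score in HUMAN_SCORES)
    misses = sum(score < threshold for score in AI_SCORES)
    return false_positives / len(HUMAN_SCORES), misses / len(AI_SCORES)


for threshold in (0.50, 0.65):
    fpr, miss = error_rates(threshold)
    print(f"threshold={threshold:.2f}  false positives={fpr:.1%}  missed AI={miss:.1%}")
```

In this made-up data, moving the threshold from 0.50 to 0.65 removes every false positive but doubles the number of AI samples that go undetected, which is the same kind of compromise Turnitin describes.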

Recent Adjustments for Accuracy

To improve the system’s performance and minimize errors, Turnitin has implemented several updates based on its internal testing:

Asterisk Warnings for Low AI Scores: AI scores below 20% are now marked with an asterisk in the report, signaling that these results are less reliable and may have a higher chance of false positives.

Minimum Word Count Raised: The word count threshold for running AI detection has been increased from 150 to 300 words. Turnitin found that longer documents yield more accurate detection results.

Changes to Intro and Conclusion Detection: Turnitin observed that false positives often occur at the start or end of papers (e.g., introductions or conclusions), so it revised how those parts are analyzed.
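The first two adjustments above amount to simple display rules, which can be written down as a short sketch. The function name and the returned strings are hypothetical; only the 300-word minimum and the 20% asterisk cutoff come from the description above, and the revised handling of introductions and conclusions is not modeled here.

```python
MIN_WORDS = 300         # minimum length for AI detection, as stated above
LOW_SCORE_CUTOFF = 20   # scores below this are marked with an asterisk


def describe_ai_score(word_count: int, ai_percent: float) -> str:
    """Return a report label for a submission (hypothetical wording)."""
    if word_count < MIN_WORDS:
        return "not evaluated (under 300 words)"
    if ai_percent < LOW_SCORE_CUTOFF:
        return f"{ai_percent:.0f}%* (low score, less reliable)"
    return f"{ai_percent:.0f}%"


print(describe_ai_score(250, 40))
print(describe_ai_score(500, 12))
print(describe_ai_score(500, 45))
```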

AI Paraphrasing Detection

Turnitin also includes a tool for detecting AI-paraphrased text, but it only runs after content is first flagged as AI-generated. This means the paraphrasing check does not affect the overall false positive rate. However, the paraphrasing detector can sometimes misidentify what kind of AI involvement occurred:

It might label AI-generated text as both AI-generated and AI-paraphrased (even if it wasn't paraphrased), or

It might fail to recognize that some AI-generated text was also paraphrased.

Is Turnitin’s AI Detector Biased Against Non-Native English Writers?

Concern Raised by Liang et al. (2023):

In 2023, researchers Liang and colleagues raised concerns that AI writing detectors may exhibit bias against non-native English writers, also known as English Language Learner (ELL) authors. Their conclusion was based on an analysis of 91 TOEFL practice essays, all of which were fewer than 150 words in length. The study sparked wide discussion in the academic community and prompted some Turnitin users to seek a more detailed response from the company.

Liang, W., Yuksekgonul, M., Mao, Y., Wu, E., & Zou, J. (2023). GPT detectors are biased against non-native English writers. arXiv preprint arXiv:2304.02819.

In response, Turnitin published its own study in October 2023 to investigate whether its AI writing detector demonstrates any statistically significant bias against ELL writers.

What Did Turnitin Find?

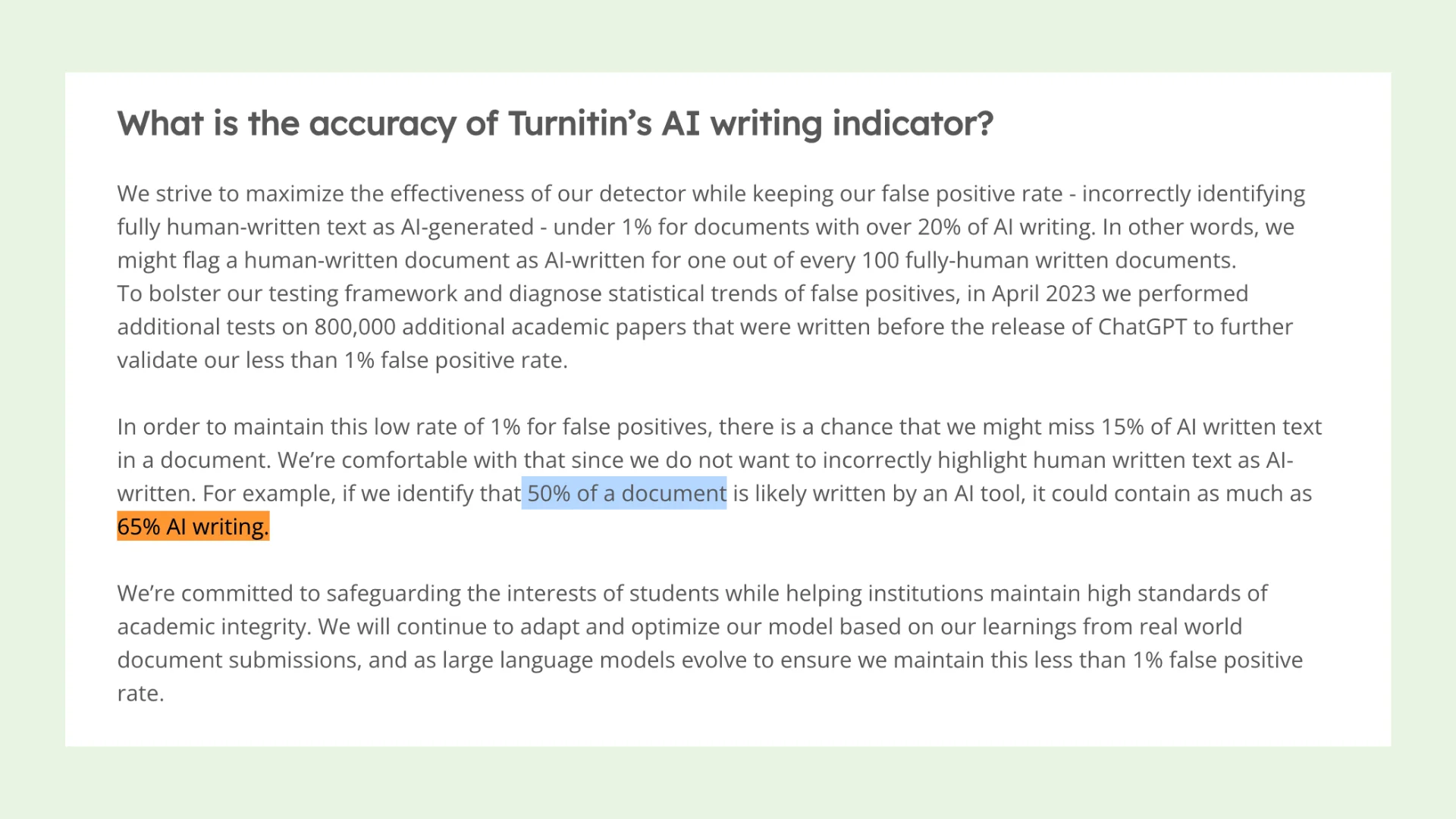

Turnitin tested its AI writing detector using thousands of authentic student essays, sourced from multiple open academic datasets. These included submissions by both native English speakers (L1 writers) and ELL writers (L2 writers). The samples were categorized by length:

Short texts: 150–300 words

Longer texts: 300 words or more

Here’s what they discovered:

For Longer Texts (300 Words or More):

The false positive rate—the likelihood that the detector incorrectly flags human-written text as AI-generated—was nearly identical for both ELL and native English writers. The difference was so minor that it was not statistically significant.

➡️ Conclusion: When documents meet the minimum word count, Turnitin’s AI detector does not show measurable bias against ELL writers.
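To make "not statistically significant" concrete, here is a minimal sketch of a two-proportion z-test, one common way to compare two false positive rates. Turnitin does not state which test it used, and the counts in the usage example are hypothetical numbers chosen for illustration, not Turnitin’s data.

```python
import math
from typing import Tuple


def two_proportion_z_test(flags_a: int, n_a: int, flags_b: int, n_b: int) -> Tuple[float, float]:
    """Return (z, two_sided_p) for the difference between two proportions."""
    rate_a, rate_b = flags_a / n_a, flags_b / n_b
    pooled = (flags_a + flags_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value under the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: 10 false flags in 1,000 native-speaker essays
# versus 14 false flags in 1,000 ELL essays.
z, p = two_proportion_z_test(10, 1000, 14, 1000)
print(f"z = {z:.2f}, p = {p:.2f}")
```

With these invented counts the p-value is well above the conventional 0.05 cutoff, so a gap of this size could plausibly be due to chance. That is the sense in which a small difference between groups can be "not statistically significant".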

For Shorter Texts (Under 300 Words):

The false positive rate increased overall, and the difference between native and non-native writers became more pronounced. Turnitin acknowledged that shorter samples lack enough linguistic information ("signal") for the AI model to accurately distinguish between human and AI-generated writing.

➡️ This makes the detector less reliable for all short submissions, and potentially more so for ELL writers.

As a result, Turnitin updated its system to only evaluate submissions with at least 300 words, aiming to reduce false positives and improve accuracy.

Final Conclusion:

Turnitin concludes that its AI writing detector does not demonstrate bias against non-native English writers, as long as the submission meets the 300-word minimum. The company also emphasized its ongoing efforts to improve the fairness and reliability of its system, especially as large language models (LLMs) continue to evolve.

How Are Universities Responding?

While Turnitin has defended the integrity of its AI writing detector, not all academic institutions are fully convinced. Several universities have raised concerns about the tool’s transparency, reliability, and potential impact on student trust. Some have even chosen to disable the feature altogether, citing risks of false accusations and lack of sufficient validation. Below are examples of how two U.S. universities—Vanderbilt University and Temple University—have evaluated and responded to Turnitin’s AI detection system.

Vanderbilt University’s Decision to Disable Turnitin’s AI Detector

Vanderbilt University decided to disable Turnitin’s AI detection tool due to concerns about its effectiveness and transparency. The tool was activated with less than 24 hours’ notice to customers, without an option to opt out. Vanderbilt questioned how the detector works, as Turnitin did not disclose detailed methods for identifying AI-generated text. While Turnitin claims a 1% false positive rate, Vanderbilt noted that with 75,000 papers submitted in 2022, this could mean around 750 false flags for AI use. Other universities have also reported cases of students wrongly accused of AI use, often linked to Turnitin’s detector. Additionally, studies have suggested the detector may be more likely to flag writing from non-native English speakers as AI-generated, raising fairness concerns.
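Vanderbilt’s estimate is simple arithmetic, sketched below. The function name is hypothetical, and the calculation assumes that all 75,000 papers were human-written and that the 1% rate applies uniformly to each of them.

```python
def expected_false_flags(human_papers: int, false_positive_rate: float) -> int:
    """Expected number of human-written papers wrongly flagged as AI."""
    return round(human_papers * false_positive_rate)


print(expected_false_flags(75_000, 0.01))  # 750
```

Even a rate that sounds small in percentage terms translates into hundreds of potentially affected students at the scale of a single university.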

Evaluation of Turnitin’s AI Writing Detector at Temple University

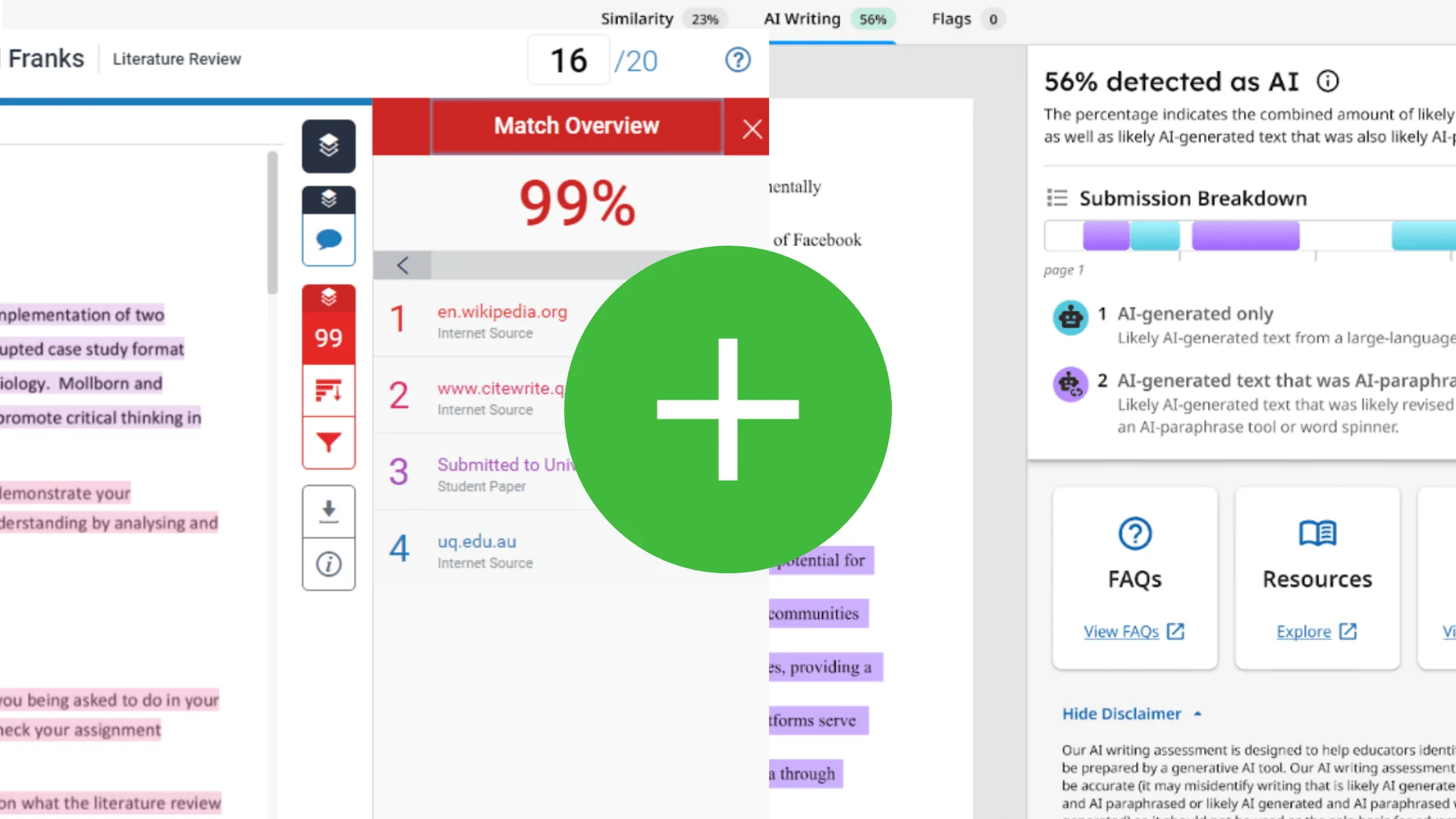

Researchers at Temple University’s Center for Student Success and the Center for the Advancement of Teaching (Temple CAT) conducted a study on Turnitin using 120 text samples divided into four categories: fully human-written, fully AI-generated, disguised AI-generated (texts paraphrased to evade detection), and hybrid texts combining AI and human contributions. These hybrid texts modeled real-world and educational scenarios, such as AI-generated content edited by humans or human-written content refined by AI. All samples were analyzed using Turnitin’s AI detector.

Results:

Human-written texts: 93% accurately identified.

Fully AI-generated texts: 77% accurately detected.

Disguised AI-generated texts: Detection dropped to 63%.

Hybrid texts: Only 43% correctly identified; the detector’s flagging poorly matched actual AI-generated portions.

Overall, the study reported that Turnitin’s AI detector was approximately 86% accurate in detecting AI use (a 14% error rate), with most errors occurring in disguised and hybrid texts.
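As a quick sanity check on how these numbers relate, the sketch below averages the four per-category rates. It assumes the four categories were equal in size, which the description of the 120 samples suggests but does not state outright.

```python
# Per-category accuracy figures from the results listed above (percent).
CATEGORY_ACCURACY = {
    "human-written": 93,
    "fully AI-generated": 77,
    "disguised AI-generated": 63,
    "hybrid": 43,
}


def unweighted_mean(rates: dict) -> float:
    """Simple average across categories, assuming equal category sizes."""
    return sum(rates.values()) / len(rates)


print(unweighted_mean(CATEGORY_ACCURACY))  # 69.0
```

The simple average comes out at 69%, which suggests the 86% overall figure was calculated on a different basis than an equal-weight average of these four categories. When reading headline accuracy numbers, it is worth checking which samples and which definition of "correct" they refer to.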

Discussion and Implications:

Turnitin’s AI detector reliably identifies purely human-written work and is useful when AI use is completely prohibited, as a 0% AI score strongly indicates human authorship. However, because the tool is designed to minimize false positives, it sometimes attributes AI-generated passages to the human author. Crucially, the detector’s flag reports do not accurately pinpoint which parts of a paper are AI-generated, especially in hybrid texts, an increasingly common format in educational settings.

Unlike plagiarism detection, AI-generated text has no direct source to link to, so flagged sections do not provide original source references. This lack of verifiable links limits instructors’ ability to independently confirm flagged content, requiring trust in Turnitin’s algorithm without transparent evidence.

What Are Regular Users Saying About Turnitin?

Now let’s look at how everyday users—especially students—are reacting to Turnitin’s AI detection tool. While some acknowledge its potential, many express serious doubts about its accuracy and fairness. Online discussions, especially on platforms like Reddit, show growing frustration with false positives and inconsistent results. Users frequently report that their original, human-written work is being wrongly flagged as AI-generated.

User Concerns on Reddit

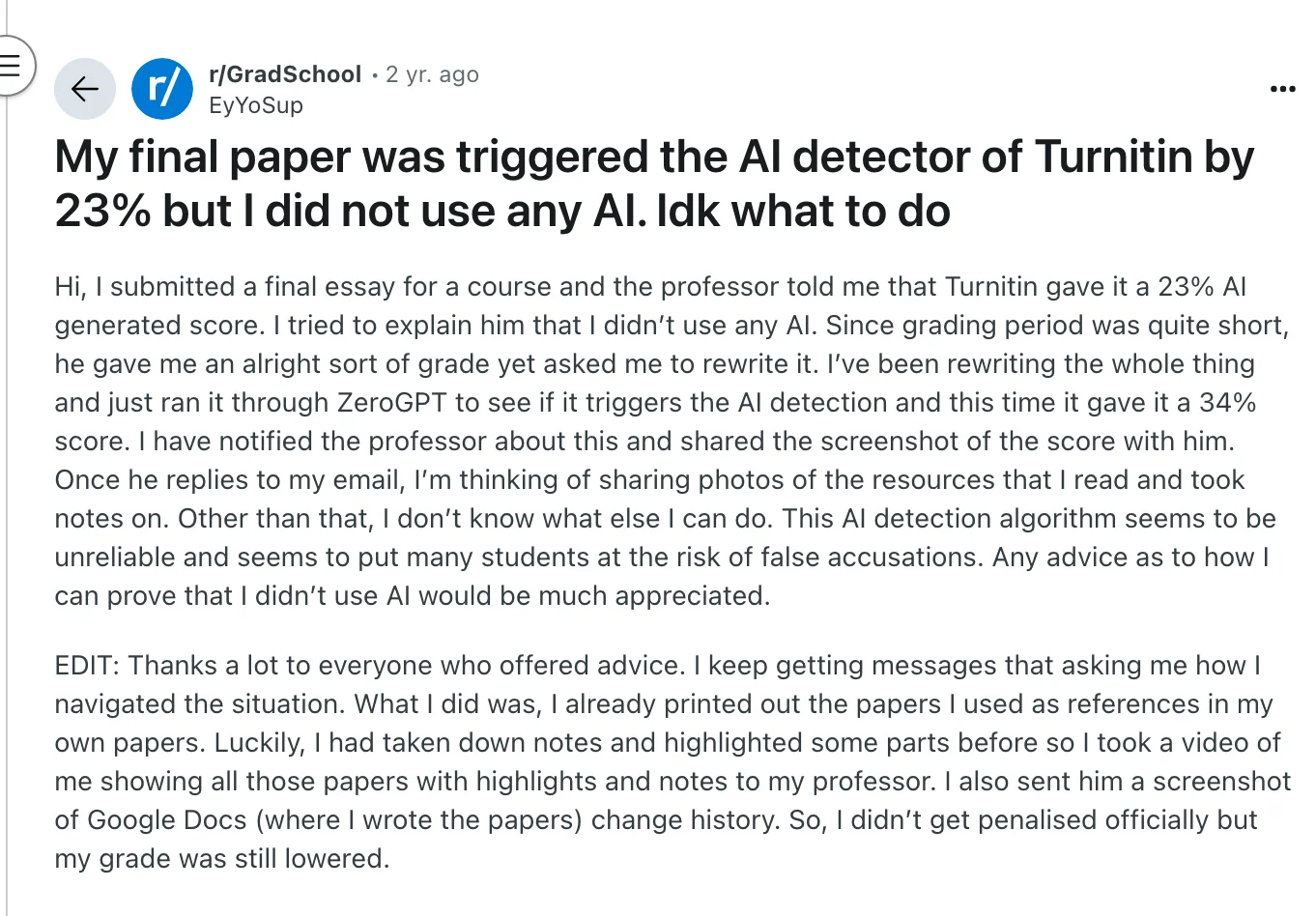

Several Reddit users have shared personal experiences of being unfairly flagged by Turnitin’s AI detector:

False Positives: EyYoSup reported that their final paper was flagged as 23% AI-written, even though they used no AI at all. Another user was shocked to receive a 48% AI score for content based entirely on personal analysis and research from reputable websites.

Inaccuracy Acknowledged by Schools: Some schools appear to recognize these issues. One commenter said their institution uses Turnitin's AI detection results only for reference, not as final evidence, acknowledging that no current AI detector is 100% reliable.

Opinion

These user reports suggest a gap between Turnitin’s claims and students’ real-world experiences. While the tool may work well under certain conditions, its tendency to misclassify genuine human writing—especially when students paraphrase or summarize research—raises fairness concerns. Relying too heavily on such tools for grading or academic decisions, especially without transparency or appeal processes, risks harming students who haven't done anything wrong.

Why Your Essay Might Be Detected as AI

Many students feel confused and frustrated when Turnitin flags their original work as AI-generated. If this has happened to you, you're definitely not alone.

Below are some specific reasons your human-written essay might still be flagged:

1. Overly Formal or Generic Language

AI-generated text often sounds polished and neutral. If your essay uses perfect grammar, lacks contractions, or reads like a textbook, the detector might misread it as AI-generated—even if you wrote it yourself.

2. Lack of Personal Voice or Sentence Variation

AI tools tend to produce sentences that follow predictable structures. If your writing lacks variation, nuance, or a clear personal voice, it may resemble these machine-like patterns.

3. Heavy Paraphrasing of Online Sources

Even if you research thoroughly and paraphrase everything yourself, summarizing popular online content too closely can mimic the style of AI, which also pulls from publicly available data.

4. Short Length or Low Word Count

Turnitin has stated that essays under 300 words are more likely to be inaccurately flagged. Short texts give the AI detector less context to work with, increasing the risk of misidentification.

5. Repetitive Structure or Disconnected Ideas

AI writing can sometimes appear repetitive or overly logical but lacking in depth. If your work includes lists, repeated phrases, or weak transitions, it might mirror the flow of AI-generated writing.

6. Popular or Common Topics

Subjects that are widely discussed online—like climate change, the benefits of social media, or school uniforms—are familiar to AI models. If your argument follows common patterns or uses well-known phrases, the detector may label it as AI-written.

7. Citation and Reference Style

In some cases, Turnitin’s detector may flag certain citation styles or reference lists that closely match AI training data. This is rare, but it can happen—especially if your sources are widely used or phrased in a generic way.

For Students: What to Do If You're Flagged by Turnitin's AI Detector

Being flagged for AI use when you didn’t actually use AI can feel frustrating and unfair. But don’t panic—there are steps you can take to explain and defend your work.

1. Stay Calm and Review the Report

First, review the score in the Turnitin report carefully. Turnitin doesn’t mark your paper as definitively AI-written—it gives a percentage of how much it suspects might be AI-generated. Check what parts were flagged and ask yourself: did anything sound too polished, too repetitive, or too similar to typical AI output?

2. Gather Your Writing Process Evidence

The best way to prove your work is original is to show how you created it:

Show your drafts – If you wrote your essay in Google Docs or Word, use the version history or track changes feature to prove your writing process.

Take screenshots or videos – Documenting your handwritten notes, outlines, or printed research with highlights can support your case.

Provide your references – If you used outside sources, show them to your instructor with notes on how you used the information.

3. Talk to Your Instructor

Reach out respectfully. Explain that the content is your own and share the evidence of your writing process. Let them know you’re happy to walk them through how you developed your ideas. Most instructors appreciate transparency and effort over confrontation.

4. Ask for a Review or Re-evaluation

If your school allows it, you may be able to request a second opinion or an academic appeal. Don’t be afraid to ask for clarity on the policy and your rights as a student.

How Should Teachers Use Turnitin’s AI Detection Reports?

Teachers should approach Turnitin’s AI detection reports with caution, context, and communication—not as a final verdict, but as one piece of information in a larger academic picture. Here’s a breakdown of how to use these tools responsibly and fairly:

1. Don’t Rely on the Score Alone

Turnitin’s AI score is not proof of misconduct. It’s meant to assist, not replace, a teacher’s judgment. A high percentage doesn't automatically mean the student cheated.

2. Compare With Past Work

Look at the flagged assignment alongside the student’s previous submissions. Are there differences in tone, structure, vocabulary, or complexity? Sudden shifts may raise valid questions—but only when taken in context.

3. Cross-Check With Other Tools

Running the same text through different AI detectors can give additional insight. Results may vary, but if several tools raise the same concerns, it might be worth a deeper look.

4. Talk With the Student

Have a respectful conversation. Ask about their writing process, sources, and timeline. Show them the flagged sections. If they can provide drafts, notes, or version history (e.g., in Google Docs), that’s valuable context.

5. Offer a Chance to Revise

Unless there’s clear evidence of intentional misuse, it’s often best to give students a chance to revise or rewrite. Many students may not realize how their writing could be misread by an algorithm.

6. Follow Institutional Policy

If you believe misconduct occurred and the student doesn’t provide a reasonable explanation, follow your school’s academic integrity process—but keep in mind the limitations of AI detection tools and the potential for false positives.

7. Be Proactive About Expectations

Set clear guidelines at the start of the course regarding AI usage: what’s allowed, what’s not, and how to cite tools like ChatGPT if permitted. Having these expectations upfront avoids confusion and builds trust.

FAQ

Q: Is it possible for Turnitin to be wrong?

A: Yes, Turnitin can make mistakes. Its plagiarism and AI detection tools are helpful, but they’re not perfect. Sometimes, original work is flagged incorrectly, especially if it resembles common writing patterns or heavily cited information.

Q: Is 36% on Turnitin okay?

A: It depends on the assignment. For plagiarism detection, a 36% Turnitin score might be acceptable if most of it comes from properly cited quotes or references. For AI detection, it’s more complicated: 36% may or may not be a concern depending on what was flagged and how the instructor interprets it.

Q: Is Turnitin really reliable?

A: Turnitin is a widely used tool, but it’s not foolproof. It’s best at detecting direct text matches for plagiarism, but AI detection is newer and still being improved. Educators are advised to use it as a guide—not a final judgment.

Q: Is 70% on Turnitin bad?

A: A 70% similarity score for plagiarism usually raises red flags and needs close review. For AI detection, a 70% score doesn’t automatically mean misconduct, but it will likely lead your instructor to investigate further.

Q: How precise is the Turnitin AI detector?

A: It’s reasonably accurate with clearly AI-written or human-written texts, but it struggles with hybrid writing—where human and AI contributions are mixed. Accuracy drops further when AI content is paraphrased or heavily edited.

Q: How accurate is the Turnitin AI detector compared to others?

A: According to independent studies, Turnitin performs better than many free detectors, but it’s still not perfect. In the Temple University evaluation discussed above, for example, accuracy fell to 63% on disguised AI text and 43% on hybrid text. Other tools like GPTZero or Originality.ai may give different results but also have limitations.

Q: Can Turnitin detect AI under 300 words?

A: Not reliably. Short responses don’t provide enough context for Turnitin’s AI detector to make a strong determination, and the risk of false positives increases. For that reason, Turnitin now runs AI detection only on submissions of at least 300 words.

Final Thoughts

Turnitin’s AI detector isn’t always accurate, and being flagged doesn’t automatically mean misconduct. These tools are still developing and can misidentify human writing—especially when it mirrors patterns often seen in AI-generated content.

Both students and educators should approach AI detection results with caution, context, and open dialogue. By understanding how these tools work and why false positives occur, we can move toward a fairer, more informed, and more thoughtful use of AI in education.