Can Turnitin Detect AI? Models, Reports & Accuracy Explained

With AI writing tools like ChatGPT becoming more common, many people are wondering: can Turnitin actually detect AI-generated content?

In this article, we’ll break down how Turnitin’s AI detection works, what it can and can’t catch, and what that means for academic honesty today.

Does Turnitin Detect AI?

Yes.

Turnitin officially launched its AI writing detection tool in 2023. This feature is built directly into the Similarity Report that many instructors already use to check for plagiarism. But instead of just looking for copied text, this tool checks whether the writing may have been generated or paraphrased using large language models like ChatGPT or tools like Quillbot.

What Content Does It Actually Detect?

Turnitin’s AI model is trained to flag content that appears to be written by large language models (LLMs) such as ChatGPT, Claude, and others. It analyzes sentence structure, vocabulary patterns, and tone — all of which can suggest AI-generated content.

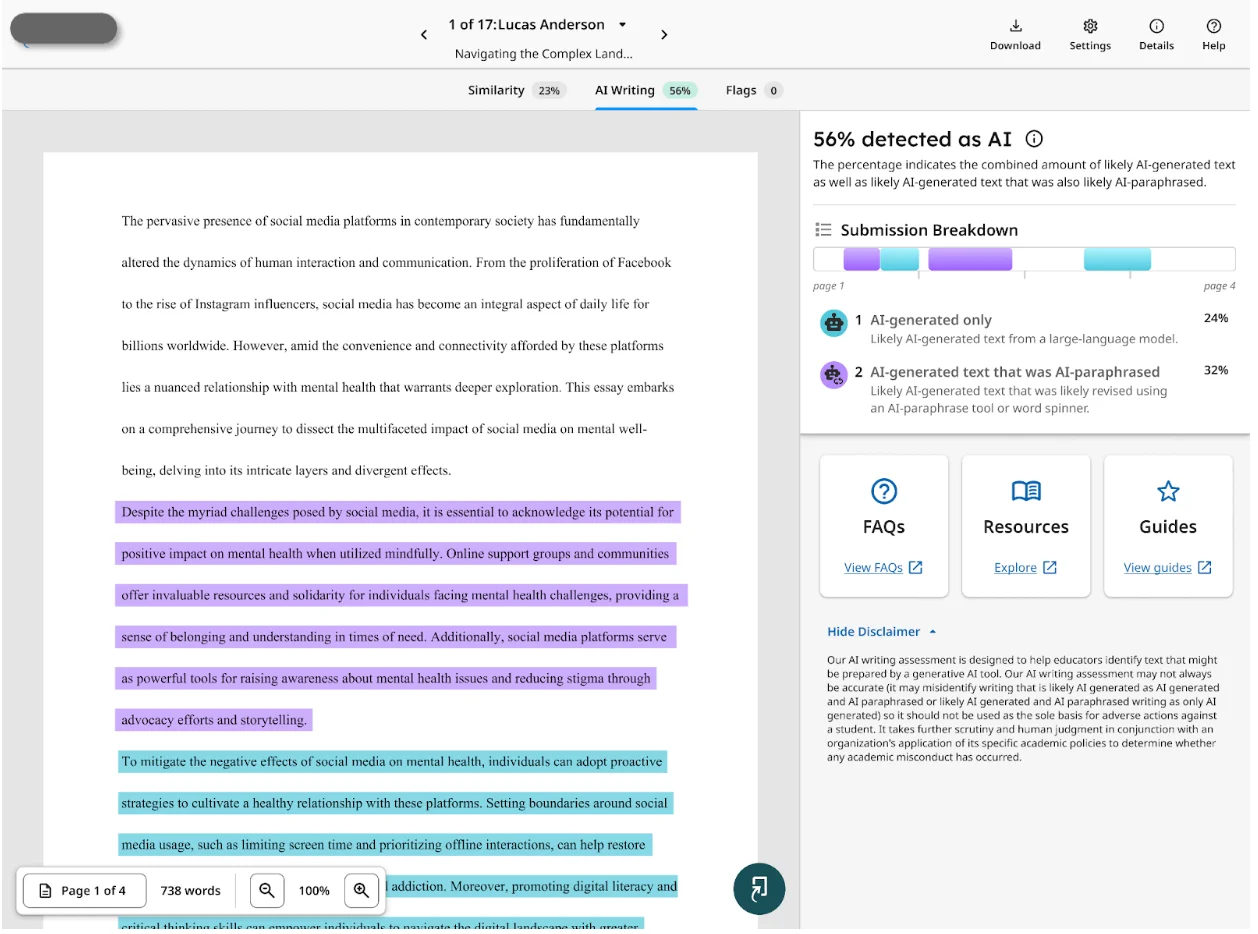

The report breaks results down into two categories:

✅ AI-Generated Text

Content that looks like it was written directly by an AI tool.

🔄 AI-Paraphrased Text

Content that seems to have been generated by AI and then reworded using a paraphrasing tool like Quillbot or an AI spinner.

So yes — Turnitin is getting more advanced. It’s not just checking for full AI-written essays anymore. It’s also detecting smart edits made by machines.

Supported Languages (As of July 2025)

Currently, Turnitin’s AI detection works for English, Japanese, and Spanish.

However, the AI paraphrasing detection — which spots content that’s been rewritten using tools like Quillbot — is currently only available for English prose.

Only instructors and administrators can view the AI writing detection report — students don’t see this feedback by default.

What AI Detectors Does Turnitin Use?

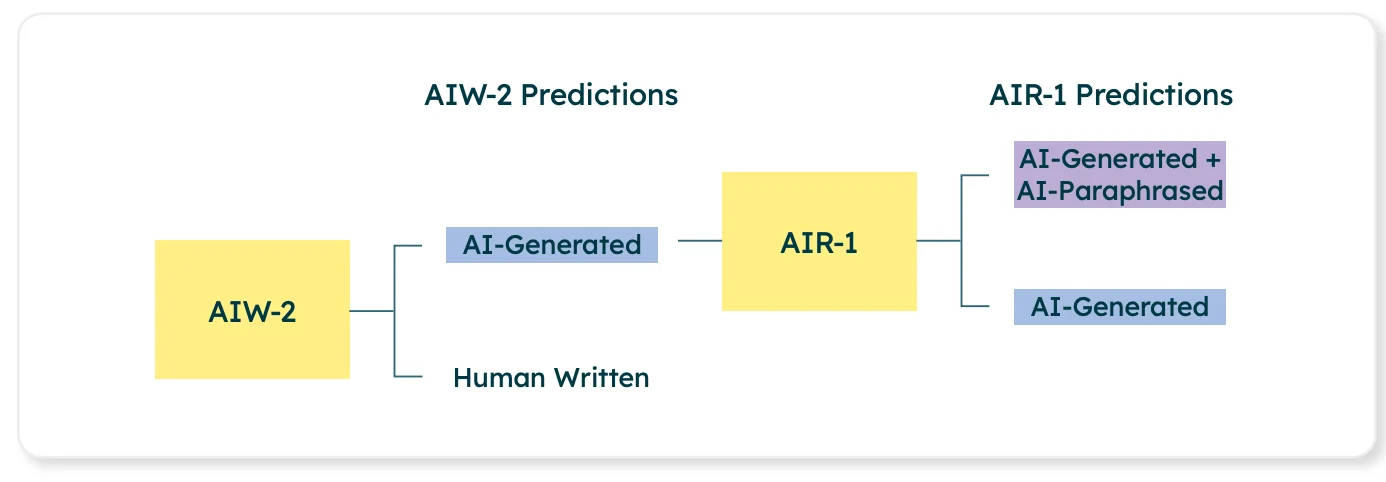

Unlike many other platforms, Turnitin doesn’t rely on third-party AI detection services. Instead, it developed its own in-house tools, known as AIW (AI Writing detection) and AIR (AI Rewriting detection). These are sophisticated systems trained specifically to analyze academic writing for traces of AI-generated or AI-paraphrased content, and yes, there’s a big difference between the two.

AIW is designed to identify the kind of patterns that large language models like GPT-3, GPT-4, or Gemini typically produce. These models generate content by selecting the most probable next word in a sequence, based on massive amounts of training data from the internet. While that may sound smart, it results in writing that’s strangely consistent — too consistent. Human writing, on the other hand, is full of quirks, inconsistencies, and unpredictable phrasing. That contrast is what Turnitin’s AIW model is trained to detect.

So Turnitin can detect ChatGPT content.

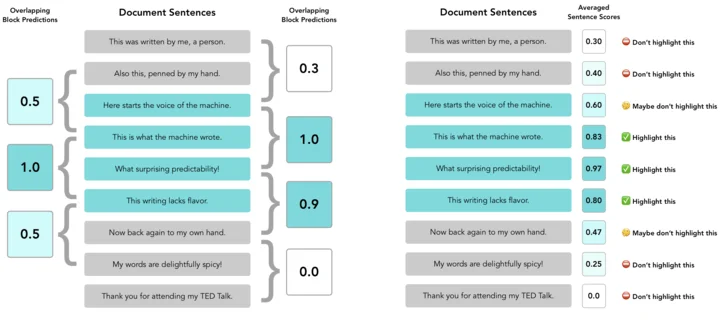

This detection system isn’t guessing. It makes probabilistic judgments about how likely it is that a sentence was generated by an LLM. The closer a sentence gets to that predictable, mechanical structure, the higher the likelihood it’s flagged. But Turnitin doesn’t flag individual words. It evaluates the text in overlapping segments of about 5 to 10 sentences each, so that every sentence is judged in context. Every segment is scored between 0 and 1, with 1 indicating a high probability of AI generation.

But detection doesn’t stop there. If a segment is flagged as AI-written, it’s then passed through Turnitin’s second tool, AIR, which evaluates whether the content has been paraphrased by an AI rewriter like Quillbot or Grammarly’s paraphrasing tool. This step is important because many students now use AI to rewrite AI content to evade detection. AIR’s job is to catch that layer of machine manipulation.

Both models were trained on a broad mix of data — not just AI-generated text, but real student essays from a variety of disciplines, education levels, and linguistic backgrounds. That kind of diverse training set helps the model avoid bias and reduces false positives, particularly for students who speak English as a second language or write in less common academic styles.

In short, when a student submits a paper, Turnitin slices it up, analyzes each piece for signs of machine-generated structure, evaluates whether AI paraphrasing tools were used, and then produces a visual report for instructors. The report doesn’t just flag text — it breaks it down into what was likely AI-written and what was likely paraphrased by another machine.
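The slicing step described above can be sketched in a few lines of Python. This is only an illustration of overlapping sentence windows, not Turnitin’s implementation: the function names, the naive sentence splitter, the window size of 5, the stride of 3, and the caller-supplied `score_fn` are all assumptions made for the example.

```python
import re
from typing import Callable, List, Tuple


def split_sentences(text: str) -> List[str]:
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def overlapping_segments(
    sentences: List[str], size: int = 5, stride: int = 3
) -> List[Tuple[int, List[str]]]:
    """Return (start_index, window) pairs; windows overlap when stride < size."""
    if size < 1 or stride < 1:
        raise ValueError("size and stride must be positive")
    segments = []
    start = 0
    while start < len(sentences):
        segments.append((start, sentences[start:start + size]))
        if start + size >= len(sentences):
            break  # this window already reaches the last sentence
        start += stride
    return segments


def score_segments(
    segments: List[Tuple[int, List[str]]], score_fn: Callable[[str], float]
) -> List[Tuple[int, float]]:
    """Apply a hypothetical scorer to each window and clamp results to [0, 1]."""
    return [
        (start, min(1.0, max(0.0, score_fn(" ".join(window)))))
        for start, window in segments
    ]
```

With ten sentences and these defaults, the windows start at sentences 0, 3, and 6, so most sentences are scored as part of more than one segment.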

What Kind of Files Can Turnitin’s AI Detector Check?

Turnitin’s AI writing detection isn’t just about what you write — it’s also about how you submit it. If your file doesn’t meet specific technical requirements, Turnitin won’t run an AI check at all. So here’s what you need to know if you want your submission to be scanned for AI-generated content.

First, the document has to contain enough text. Turnitin now requires a minimum of 300 words — and that’s 300 words of prose, not lists, code, or outlines. Why the bump from the previous 150-word minimum? According to Turnitin, their internal testing shows that a bit more content leads to much more accurate detection. That’s because longer segments help the model recognize patterns typical of AI writing.

At the same time, there’s also a maximum cap of 30,000 words. Go beyond that, and Turnitin will skip the AI scan entirely. This word limit strikes a balance between detailed analysis and keeping the model efficient.

As for file types, Turnitin supports standard academic formats:

✅ .docx

✅ .pdf

✅ .txt

✅ .rtf

What won’t work? Anything that can’t be read as plain text prose — so skip the Google Docs links, spreadsheets, or image-based PDFs.

📌 File size also matters: the limit is under 100 MB, which is generous for text-based files. But if your file includes lots of embedded images, formatting, or heavy elements (like scanned pages), it could exceed the size limit and be rejected.

Finally, there’s the language filter. While AI detection is offered in English, Japanese, and Spanish, the AI paraphrasing detection — which flags text spun through tools like Quillbot — is currently only available for English.

So in short: if your file is under 100 MB, contains between 300 and 30,000 words of prose, is in a readable document format, and is written in one of the supported languages, Turnitin will process it for AI detection. Otherwise, the AI scan is skipped.
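As a rough pre-submission checklist, the requirements above could be expressed like this. It is a sketch that simply encodes the limits quoted in this article. The function name, the language codes, and the choice of 1 MB = 1024 × 1024 bytes are assumptions, and the prose word count is taken as an input because the article doesn’t say how Turnitin separates prose from lists or code.

```python
from pathlib import Path
from typing import List

# Limits as quoted in this article; the constant names are illustrative.
SUPPORTED_EXTENSIONS = {".docx", ".pdf", ".txt", ".rtf"}
SUPPORTED_LANGUAGES = {"en", "ja", "es"}  # English, Japanese, Spanish
MIN_WORDS, MAX_WORDS = 300, 30_000
MAX_BYTES = 100 * 1024 * 1024  # assumes 1 MB = 1024 * 1024 bytes


def ai_check_problems(
    filename: str, size_bytes: int, prose_word_count: int, language: str
) -> List[str]:
    """Return the reasons a file would likely be skipped; empty means eligible."""
    problems = []
    if Path(filename).suffix.lower() not in SUPPORTED_EXTENSIONS:
        problems.append("unsupported file type")
    if size_bytes >= MAX_BYTES:
        problems.append("file is not under 100 MB")
    if prose_word_count < MIN_WORDS:
        problems.append("fewer than 300 words of prose")
    elif prose_word_count > MAX_WORDS:
        problems.append("more than 30,000 words")
    if language not in SUPPORTED_LANGUAGES:
        problems.append("unsupported language")
    return problems


print(ai_check_problems("essay.docx", 2_000_000, 1200, "en"))
print(ai_check_problems("notes.xlsx", 1_000, 120, "fr"))
```

Returning a list of reasons rather than a single true/false makes it easier to see which requirement a file fails.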

How Accurate Is Turnitin’s AI Detection Report?

Turnitin’s AI writing detection feature is now a built-in part of its Similarity Report — but how reliable is it, really?

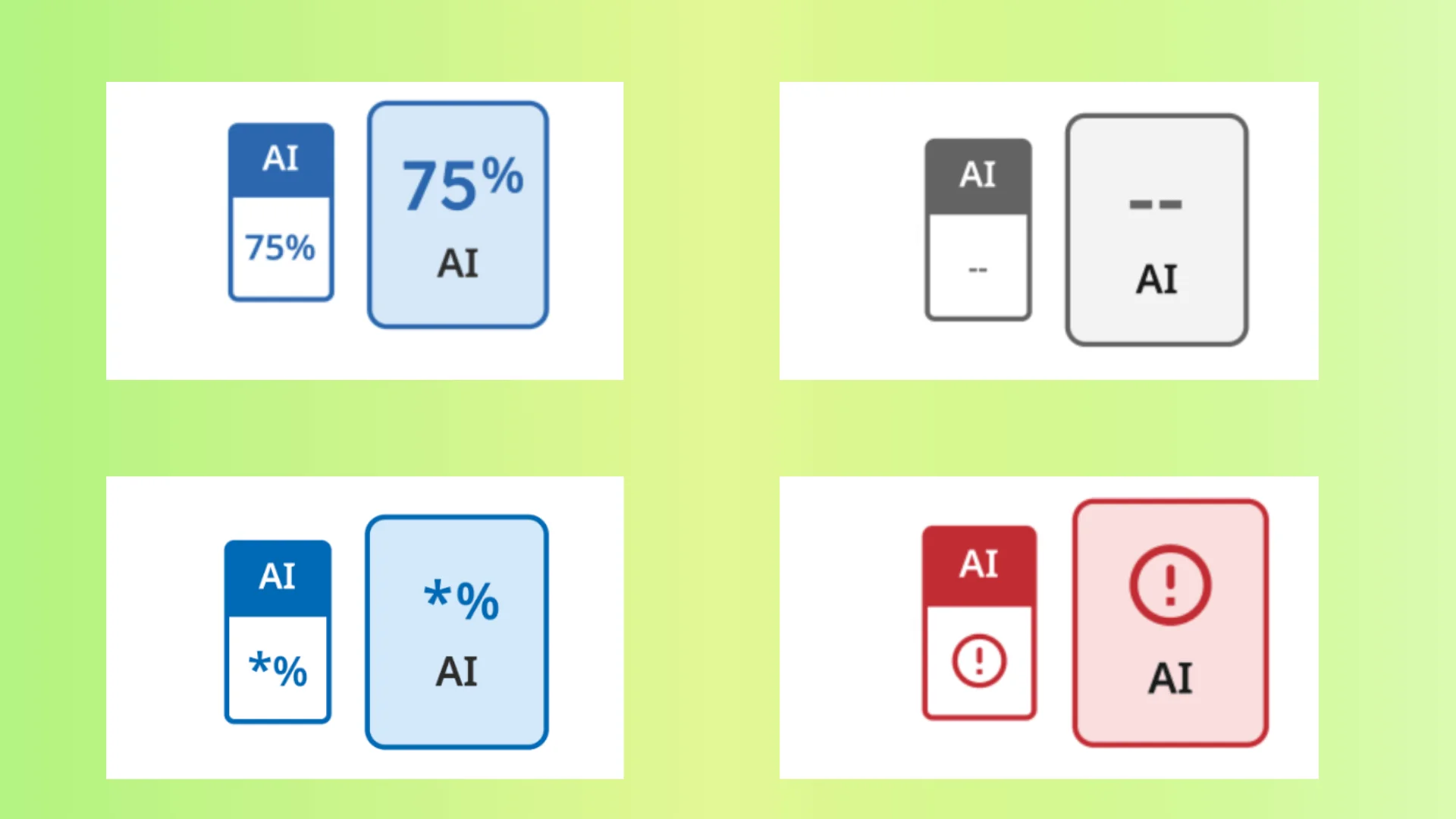

When a document is submitted, and once it passes Turnitin’s technical checks (minimum word count, file type, language, etc.), the system processes the text and displays an AI score, represented as a blue badge with a percentage. That percentage reflects the amount of qualifying prose that Turnitin’s model predicts was generated by AI — not the percentage of the entire submission.

However, if that score is below 20%, Turnitin flags it with an asterisk (*%) instead of showing a number. Why? Because the platform acknowledges that low AI scores are less reliable and may include a higher rate of false positives. It’s a quality control measure — and an honest one.

If Turnitin cannot process the document (e.g., wrong file type, not enough words, or submitted before AI detection launched), you’ll see a gray dash (– –) instead of a score. In rare cases, if something breaks in processing, you’ll get an error (!) symbol.
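The four display states described above boil down to a small decision table. The sketch below is only a reading of this article’s description: the function name and the status strings are hypothetical, and the article does not say whether a score of exactly 0% is treated differently from other scores below 20%.

```python
from typing import Optional


def ai_indicator(status: str, percent: Optional[float] = None) -> str:
    """Map a processing outcome to the indicator an instructor would see.

    status is one of "ok", "unprocessed", or "error" (hypothetical labels).
    """
    if status == "error":
        return "!"   # something broke during processing
    if status == "unprocessed":
        return "--"  # gray dash: file did not qualify for the AI check
    if status != "ok" or percent is None:
        raise ValueError("an 'ok' status needs a percentage")
    if percent < 20:
        return "*%"  # low scores are hidden because they are less reliable
    return f"{round(percent)}%"


for args in [("ok", 50), ("ok", 12), ("unprocessed",), ("error",)]:
    print(args, "->", ai_indicator(*args))
```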

Turnitin’s Accuracy Rate: The Data Behind It

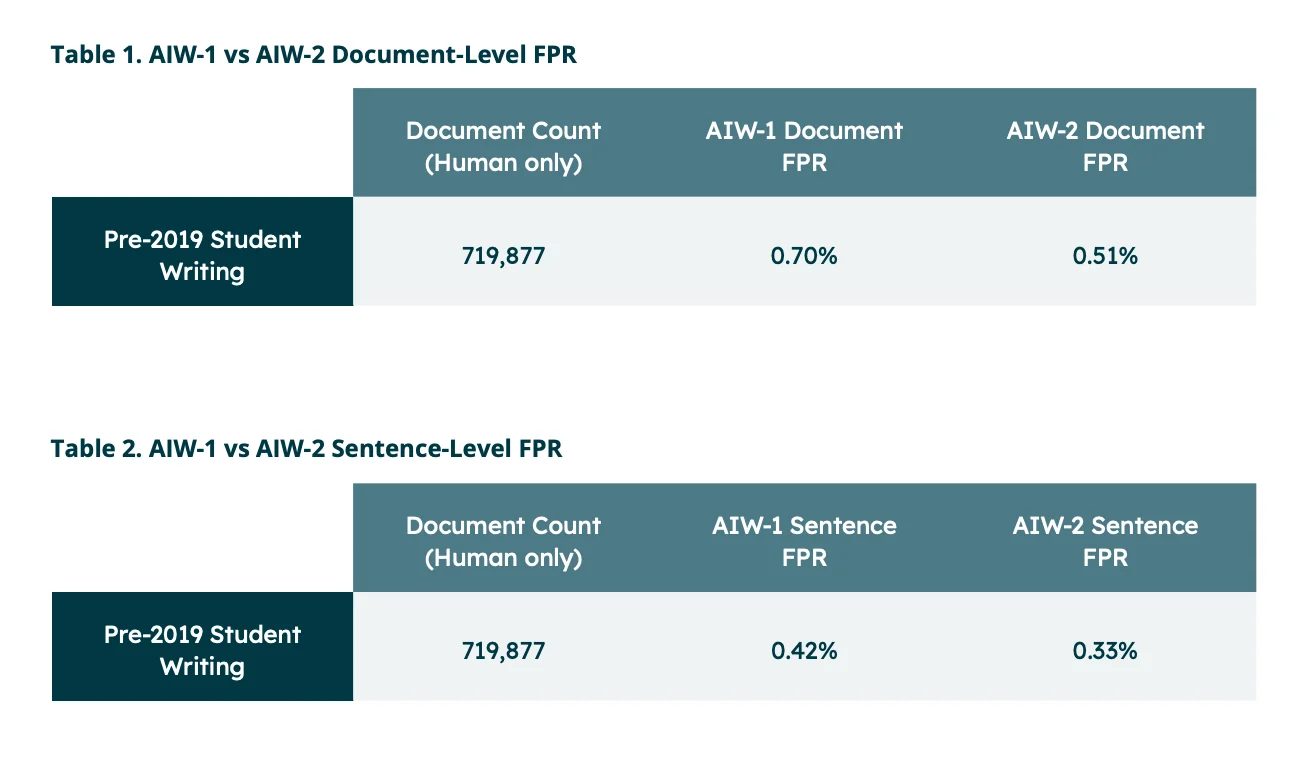

Turnitin states that it maintains a false positive rate (FPR) of under 1% — meaning that for every 100 fully human-written papers, fewer than one is mistakenly flagged as AI-generated. That’s their gold standard, and it’s backed by large-scale internal testing.

In fact, in April 2023, Turnitin ran an extended benchmark using 800,000 pre-ChatGPT academic papers to ensure that their model doesn’t mistake traditional student writing for AI output. That testing phase helped the company refine several detection methods and further train its classifiers to avoid false alarms.

But here’s the tradeoff: to keep the false positive rate low, Turnitin is okay with missing up to 15% of actual AI-generated text. So, if the AI detector flags 50% of a paper, the true share of AI-written content could be as high as 65%. This conservative approach deliberately prioritizes student fairness over aggressive detection.

Turnitin admits it errs on the side of caution because wrongly accusing a student of AI use can carry serious consequences. As a result, it favors precision (not flagging human-written work) over recall (detecting every bit of AI).
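For readers who want the terms pinned down, here is how false positive rate, precision, and recall are computed from raw counts. These are the standard textbook definitions, not anything taken from Turnitin’s code, and the counts in the usage lines are invented purely to show the arithmetic.

```python
def false_positive_rate(false_pos: int, true_neg: int) -> float:
    """Share of human-written items that were wrongly flagged as AI."""
    return false_pos / (false_pos + true_neg)


def precision(true_pos: int, false_pos: int) -> float:
    """Share of flagged items that really were AI-written."""
    return true_pos / (true_pos + false_pos)


def recall(true_pos: int, false_neg: int) -> float:
    """Share of AI-written items that the detector actually caught."""
    return true_pos / (true_pos + false_neg)


# Invented example: 1,000 human papers with 8 flagged by mistake,
# and 100 AI-written papers with 85 caught and 15 missed.
print(false_positive_rate(8, 992))
print(precision(85, 8))
print(recall(85, 15))
```

In this made-up case the false positive rate stays under 1% while 15 of the 100 AI-written papers slip through, which is the kind of tradeoff the article describes.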

Model Improvements That Enhance Accuracy

Turnitin is actively evolving its detection protocol, based on real-world data. Key updates include:

Raising the minimum word count for AI detection from 150 to 300 words to reduce noise and improve reliability.

Suppressing AI scores below 20% due to their high variability and false positive potential.

Adjusting how intros and conclusions are analyzed, since early testing showed those areas were more likely to trigger false alarms, possibly due to their generic phrasing or predictable patterns.

Is Turnitin’s AI Detection Free?

Turnitin’s AI detection was free during its preview phase to help educators adapt quickly to new challenges. However, starting January 2024, Turnitin moved to a paid licensing model to support ongoing research, development, and infrastructure improvements. This means AI detection features are no longer free and come at an additional cost beyond the basic license.

FAQ

Q1: What detectors does Turnitin use to check AI?

Turnitin uses two in-house tools: one to detect AI-written text (AIW) and another to spot AI-paraphrased content (AIR).

Q2: Will Turnitin tell you if it detects AI?

Yes, but only teachers and admins see the AI report. Students don’t get this feedback automatically.

Q3: How much AI is acceptable in Turnitin?

There’s no fixed limit. Schools decide what level of AI use is allowed.

Q4: How to avoid AI detection on Turnitin?

Write in your own voice, thoroughly revise any AI-assisted text, and avoid relying on AI for full essays or on paraphrasing tools.

Final Thought

So, can Turnitin detect AI? The short answer is yes—but it’s not perfect and it’s always evolving. AI tools keep getting smarter, and Turnitin is working hard to keep up. At the end of the day, it’s just one tool in the mix. What really matters is how students and teachers use it—and staying honest and informed is the best way to keep things fair.