What AI Detector Does Turnitin Use? Models, Accuracy & More

With AI writing tools like ChatGPT everywhere, it’s no surprise that Turnitin stepped in with its own AI detectors.

But unlike those free tools that give vague answers, Turnitin’s system is a bit more serious—and a lot more complex under the hood.

I dug into their official whitepaper and tested how it works, so in this post, I’ll walk you through what models Turnitin actually uses, how they flag AI and AI-paraphrased writing, and what makes them different from the rest.

1. What AI Detector Does Turnitin Use?

According to Turnitin’s official whitepaper (the source this article is based on), Turnitin’s AI detection system relies on two key deep-learning models:

AIW (short for AI Writing) is the model that checks whether a piece of writing was generated by an AI.

AIR (short for AI Rewriting) is a newer model that looks specifically for writing that’s been paraphrased or rewritten by AI tools to sound more human.

Both are built on a transformer-based architecture, the same kind of technology behind modern AI systems like ChatGPT.

Turnitin first launched its AI detection tool—AIW-1—in April 2023. That model was updated and replaced by AIW-2 in December 2023. Then, in July 2024, AIR-1 was added to detect more subtle use of AI, like when a student uses an AI tool just to rephrase existing content.

Together, these models help instructors spot text that might be AI-written or AI-modified, giving deeper insights into the originality of student work.

Q: Can individuals use Turnitin’s AI detectors?

Turnitin’s AI detection is part of its originality service, which is only available to institutions like schools and colleges. All of Turnitin’s services are paid.

Reports are accessible only to instructors and administrators. So, if you’re a student or individual, you can’t directly use Turnitin or its AI detectors. However, some alternative tools are available online, including community-shared Discord links or other AI detection apps.

2. How Was Turnitin’s AI Detector Developed?

First, There Was AIW-1

Turnitin’s first AI writing detector was called AIW-1, and it launched in April 2023. It worked by scanning writing for patterns that typically show up in AI-generated text — things like overly smooth structure, lack of nuance, or repetitive phrasing.

If it found enough of those patterns in a document, it would flag the text as likely AI-written.

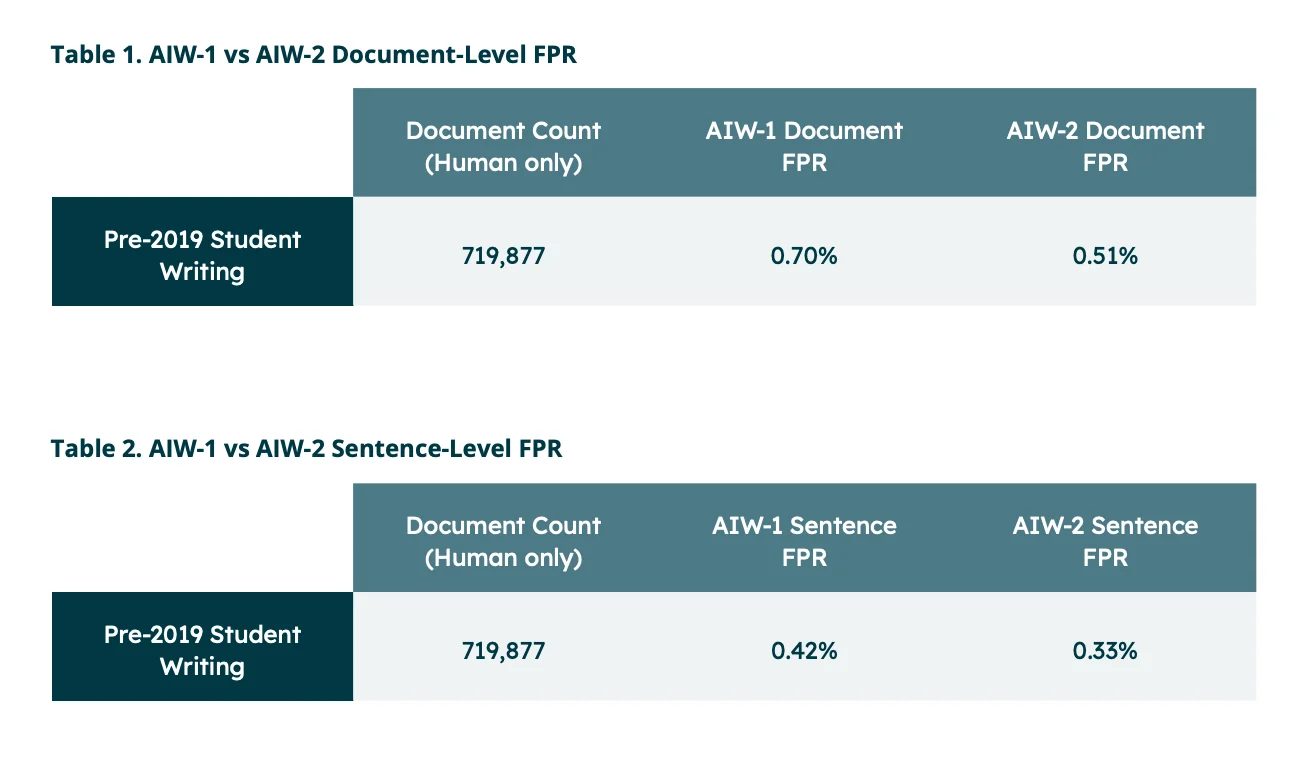

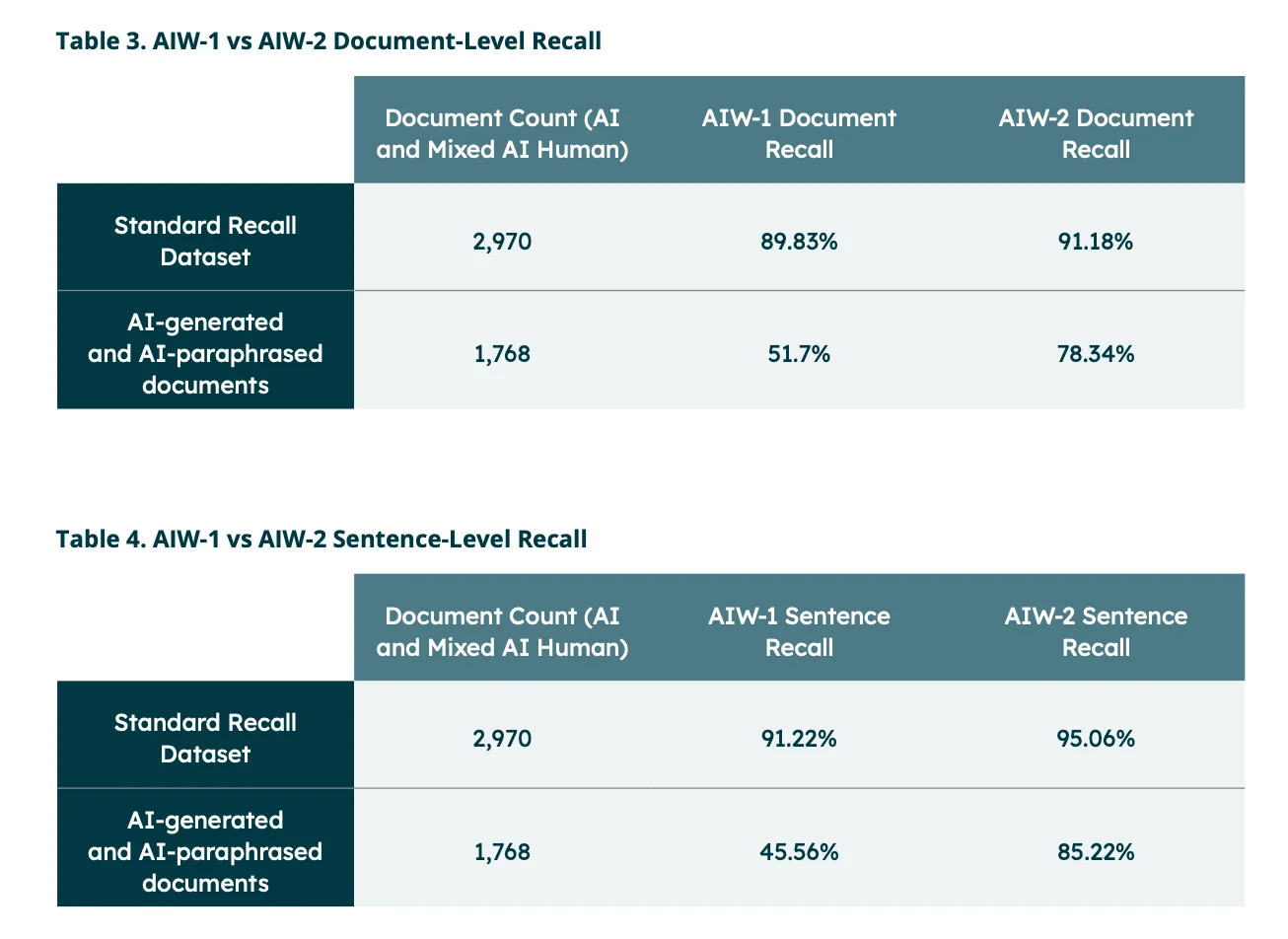

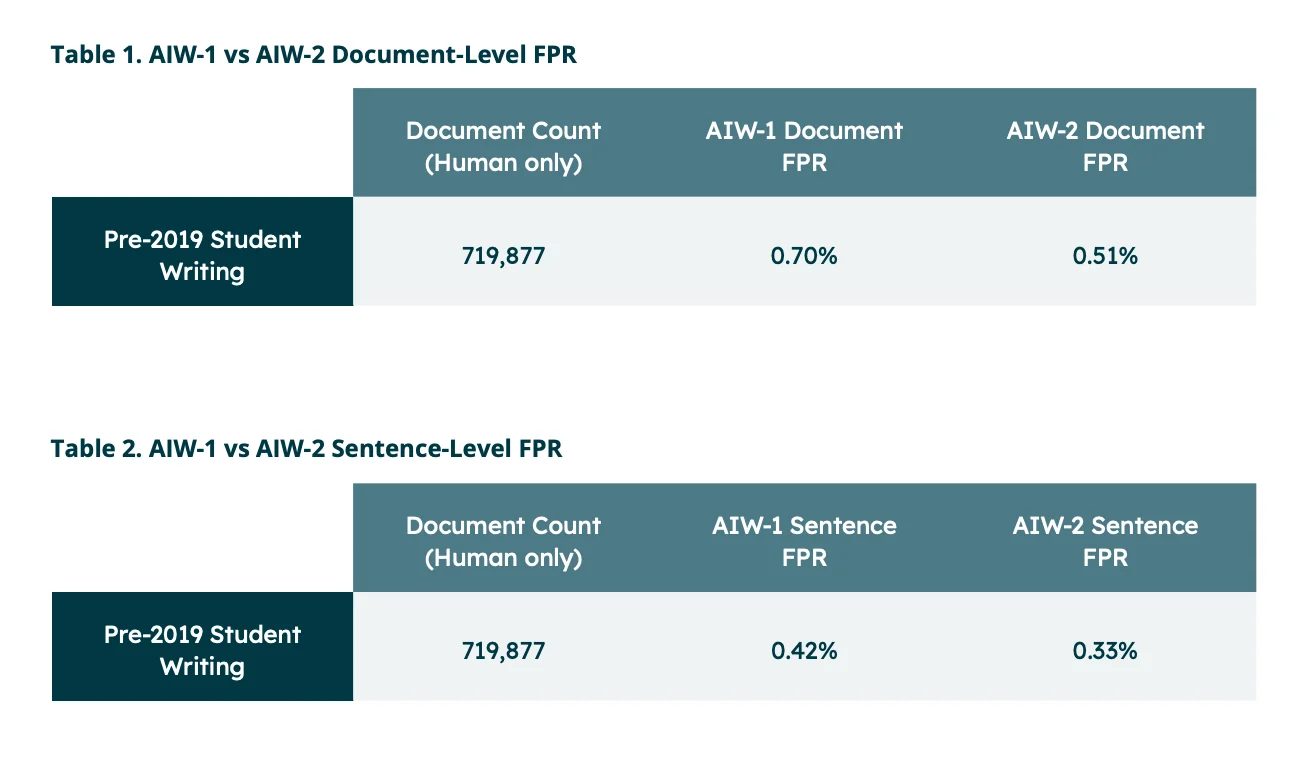

One of the strengths of AIW-1 was that it had a very low false positive rate — meaning it was careful not to wrongly accuse human writing of being AI-generated. As long as at least 20% of the document was flagged, the chance of a false alarm was less than 1%.

This helped teachers trust the results, without overreacting to small, borderline cases.

Then Came AIW-2 — A Smarter Upgrade

But here’s the thing: AI tools were getting better — especially the kind that rewrite or paraphrase text. These tools take AI-written sentences and run them through another layer to make them sound more human. That made detection much harder.

So Turnitin responded with AIW-2, which launched in December 2023. It’s a smarter model trained on a wider variety of writing examples:

Regular AI-generated text (like from ChatGPT)

Authentic student writing from different backgrounds and subjects

Text that was AI-generated, then reworded by an AI paraphraser

Mixed documents with both human and AI content

AIW-2 was also built on a transformer-based deep learning architecture, similar to the models behind tools like GPT-4. This gives it the power to recognize more complex patterns in sentence structure, grammar, and tone — things that simpler models often miss.

📊 By June 2024, Turnitin reported using AIW-2 on over 250 million student submissions (Turnitin, 2024). That gives it a massive training and testing base to work from.

In short, AIW-2 was a leap forward: it improved detection accuracy, reduced false positives, and made the system more robust against paraphrased AI content.

So far, we’ve talked about detecting AI writing in general. But what about cases where students try to mask AI text using paraphrasing tools? That’s where Turnitin’s newest model — AIR-1 — comes in.

3. The AIR-1 Model: How Does It Detect AI Paraphrasing?

More and more students (and writers in general) are using AI paraphrasers — often called “text spinners” — to rewrite AI-generated content. These tools don’t create writing from scratch like ChatGPT. Instead, they reword existing text to try and hide its origins.

But here’s the twist: paraphrasing tools leave behind different statistical fingerprints than full-blown AI writing models.

So, Turnitin needed a specialized model to catch those patterns — and that’s how AIR-1, short for AI Rewriting detection, was born in July 2024.

What Is AI Paraphrasing, and Why Is It Tricky?

Paraphrasing tools (often powered by AI themselves) take text written by an LLM like ChatGPT and reword it. The goal? Make it sound less robotic and more like a student’s original voice. These tools don’t generate new ideas — they just remix what’s already there.

From a detection standpoint, that makes things harder. The structure and vocabulary may change, but the underlying statistical signature of AI writing often remains.

How AIR-1 Works

Think of AIR-1 as a detective trained to spot reworded AI content. It doesn’t just look at what’s being said, or even at word choice and phrasing. It looks at how the text is put together, analyzing the deeper patterns that AI paraphrasers tend to leave behind: the rhythm of the text, the way ideas are restructured, and even how sentence complexity shifts.

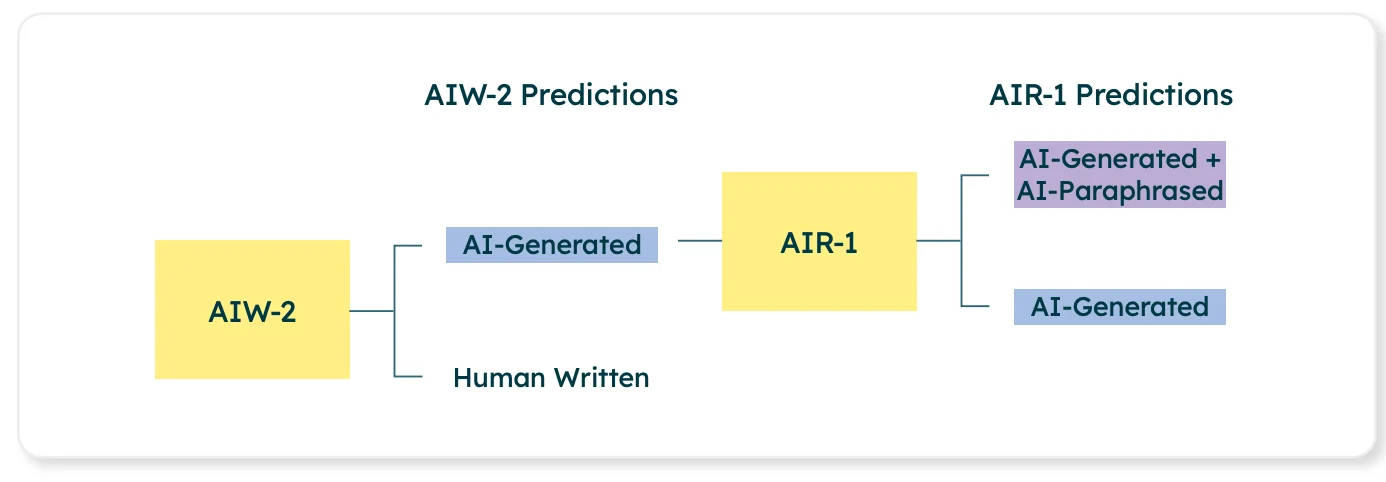

Here’s what happens behind the scenes:

First, the AIW-2 model runs its scan as usual.

If it flags 20% or more of the document as likely AI-written, then AIR-1 steps in.

AIR-1 re-analyzes those flagged sentences and looks for signs that they’ve been paraphrased by AI.

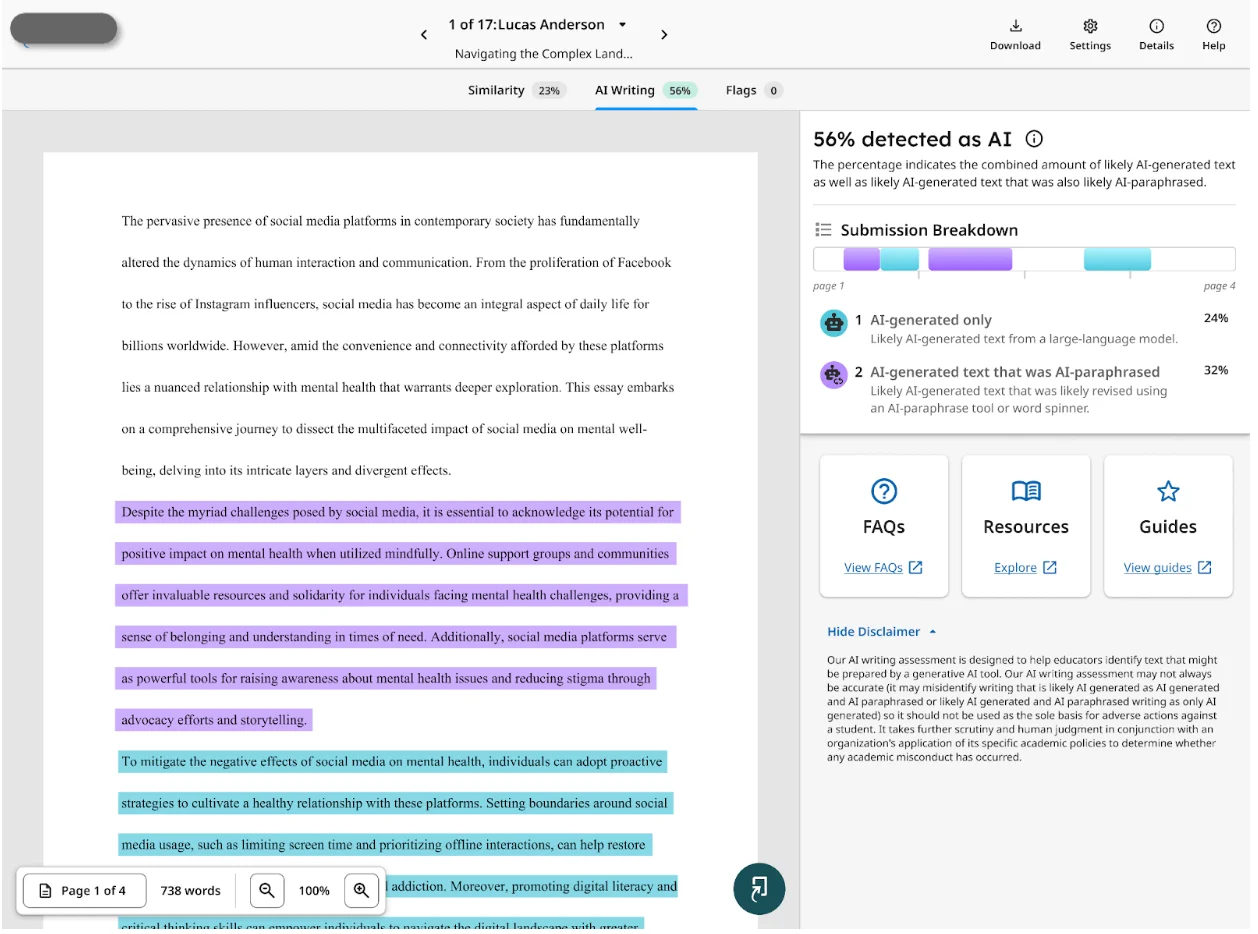

If it finds those signs, it highlights the sentence in purple in Turnitin’s AI writing report.
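The two-stage flow above can be sketched in a few lines of Python. This is only an illustration of the gating logic, not Turnitin’s code: the names `run_pipeline`, `is_ai_written`, and `is_ai_paraphrased` are hypothetical stand-ins for the AIW-2 and AIR-1 models, and measuring the 20% share by counting sentences is an assumption.

```python
from dataclasses import dataclass
from typing import Callable, List

# From the article: AIR-1 only runs when 20% or more is flagged by AIW-2.
AI_SHARE_TRIGGER = 0.20


@dataclass
class SentenceResult:
    text: str
    ai_written: bool              # stage 1 verdict (stand-in for AIW-2)
    ai_paraphrased: bool = False  # stage 2 verdict (stand-in for AIR-1)


def run_pipeline(
    sentences: List[str],
    is_ai_written: Callable[[str], bool],      # hypothetical classifier
    is_ai_paraphrased: Callable[[str], bool],  # hypothetical classifier
) -> List[SentenceResult]:
    # Stage 1: every sentence gets an AI-writing verdict.
    results = [SentenceResult(s, is_ai_written(s)) for s in sentences]
    if not results:
        return results

    # Assumption: the share is measured as a fraction of sentences.
    flagged_share = sum(r.ai_written for r in results) / len(results)

    # Stage 2: only runs past the trigger, and only on flagged sentences.
    if flagged_share >= AI_SHARE_TRIGGER:
        for r in results:
            if r.ai_written:
                r.ai_paraphrased = is_ai_paraphrased(r.text)
    return results
```

Sentences that the first stage treats as human-written never reach the second classifier, which matches the behavior the article describes.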

How It Shows Up in Reports

When AIR-1 identifies a sentence as AI-paraphrased, it marks it alongside AIW-2’s original detection. In Turnitin’s report interface, these sentences are often highlighted in purple — meaning the system thinks they’re both AI-written and paraphrased by another AI tool.

This extra level of detection helps educators better understand not just if AI was used, but how it was used — whether the student copied and pasted from a chatbot or tried to disguise it using a paraphrasing tool.

✳️ AIR-1 does not scan the entire document. It only looks at text that AIW-2 has already marked as possibly AI-generated, and it never runs paraphrase detection on text that AIW-2 judges to be human-written.

Now that we’ve met all the key tools — AIW-2 and AIR-1 — let’s talk about what kind of data and training went into building them.

4. How Were Turnitin AI Detectors Trained and Tested?

Now that we understand what AIW-2 and AIR-1 actually do, it’s fair to ask: how do we know they’re reliable?

Well, according to Turnitin, a lot of care — and a lot of data — went into training and testing these models to make sure they work as expected. Let’s break that down in simple terms.

Training the Models: Where Did the Data Come From?

To teach AIW-2 and AIR-1 how to spot AI-written or paraphrased content, Turnitin used massive datasets — but not just any text.

According to Turnitin:

The AIW-2 model was trained using a mix of AI-generated content and real, human-written academic writing. This included papers from a wide range of subjects, countries, and student demographics.

Turnitin made a special effort to include underrepresented groups, such as second-language learners and students from diverse academic backgrounds. That helps reduce bias and makes the model fairer and more accurate across different writing styles.

Importantly, AIW-2’s training data also included examples of AI-generated text that had been run through paraphrasing tools — which was key to improving its ability to catch “AI+AI-paraphrased” content.

For AIR-1, the focus was even more specific:

It was trained on a wide range of AI-paraphrased text, alongside regular human writing and pure AI content.

This helped AIR-1 learn to spot subtle clues that are unique to reworded AI — clues that traditional AI detectors often miss.

In short, these models were not just trained on examples pulled from the internet. They were carefully designed using realistic academic scenarios to match what educators and students actually deal with.

Testing the Models: How Does Turnitin Measure Accuracy?

When it comes to testing, Turnitin focuses on two core metrics:

Recall – This measures how many actual AI-written texts are correctly identified. A high recall means the model is doing a good job catching what it’s supposed to.

False Positive Rate (FPR) – This shows how often human-written text is wrongly flagged as AI. A low FPR is crucial, especially in academic settings, where a false accusation can have serious consequences.
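To make the two metrics concrete, here is a minimal sketch using their standard definitions. The function names and the tiny label lists are made up for illustration; `True` means “AI-written” and `False` means “human-written”.

```python
from typing import Sequence


def recall(y_true: Sequence[bool], y_pred: Sequence[bool]) -> float:
    """Share of truly AI-written items that were flagged as AI."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t and p)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t and not p)
    return tp / (tp + fn) if (tp + fn) else 0.0


def false_positive_rate(y_true: Sequence[bool], y_pred: Sequence[bool]) -> float:
    """Share of truly human-written items that were wrongly flagged as AI."""
    fp = sum(1 for t, p in zip(y_true, y_pred) if not t and p)
    tn = sum(1 for t, p in zip(y_true, y_pred) if not t and not p)
    return fp / (fp + tn) if (fp + tn) else 0.0


# Illustrative data: 4 AI-written and 6 human-written documents.
actual = [True, True, True, True, False, False, False, False, False, False]
flagged = [True, True, True, False, True, False, False, False, False, False]

print(recall(actual, flagged))               # 3 of 4 AI documents caught
print(false_positive_rate(actual, flagged))  # 1 of 6 human documents flagged
```

Notice that the two numbers are computed over different groups: recall only looks at the AI-written documents, while FPR only looks at the human-written ones.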

According to Turnitin, AIW-2 keeps the document-level false positive rate under 1%, as long as it finds at least 20% of a document to be AI-generated. That’s why you’ll often see this 20% threshold mentioned in the AI report — it’s a carefully chosen cut-off point based on testing.

Why “Accuracy” Alone Isn’t Enough

Interestingly, Turnitin doesn’t use the general term “accuracy” in its reporting. Why?

Because in unbalanced datasets (for example, when most documents are human-written), even a very poor model could appear to be 99% accurate just by always guessing “human.” That would be misleading.

Instead, by focusing on recall and FPR, Turnitin provides a more honest view of how well its detection system actually performs.
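Here is a quick worked example of that trap. The numbers are invented for illustration (1,000 documents, of which only 10 are AI-written), and the “model” is a do-nothing baseline that labels everything as human.

```python
# Made-up, deliberately unbalanced dataset: True = AI-written, False = human.
labels = [True] * 10 + [False] * 990

# A useless "detector" that always answers "human".
predictions = [False] * len(labels)

correct = sum(1 for t, p in zip(labels, predictions) if t == p)
accuracy = correct / len(labels)

ai_total = sum(labels)
ai_caught = sum(1 for t, p in zip(labels, predictions) if t and p)
recall = ai_caught / ai_total

human_total = len(labels) - ai_total
human_flagged = sum(1 for t, p in zip(labels, predictions) if not t and p)
fpr = human_flagged / human_total

print(f"accuracy={accuracy:.2%} recall={recall:.2%} fpr={fpr:.2%}")
```

The baseline gets 990 of 1,000 documents right, so its accuracy looks excellent, yet its recall is zero because it never catches a single AI-written document. Reporting recall next to FPR exposes that immediately.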

Now that we understand how Turnitin’s models are trained, let’s take a closer look at how they actually analyze a student’s writing once it’s submitted. This is where things get a bit more technical, but we’ll break it down simply.

How Turnitin's AI Detector Actually Works

First, the System Breaks the Text into Small Chunks

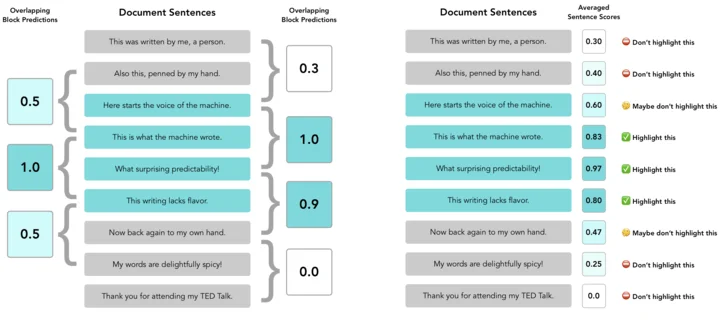

Turnitin uses a method called a segmented window approach. Basically, instead of reading the whole essay in one go, the system breaks it into small, overlapping sections — think five to ten sentences per segment.

Each of these “windows” slides through the document one sentence at a time, so every sentence ends up being analyzed within multiple segments. This gives the model different contexts to evaluate the same sentence more reliably.
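A rough sketch of that sliding-window idea is below. The function name `sliding_windows` and the default window size of five sentences are assumptions for illustration; Turnitin’s actual segmentation details are not spelled out at this level.

```python
from typing import List


def sliding_windows(sentences: List[str], window_size: int = 5) -> List[List[str]]:
    """Split a document into overlapping windows that advance one sentence at a time."""
    if not sentences:
        return []
    if len(sentences) <= window_size:
        # Very short input: the whole text is a single window.
        return [list(sentences)]
    return [
        sentences[start:start + window_size]
        for start in range(len(sentences) - window_size + 1)
    ]


doc = [f"S{i}" for i in range(1, 8)]  # seven placeholder sentences
for window in sliding_windows(doc):
    print(window)
```

With seven sentences and a window of five, a middle sentence such as `S4` lands in every window, while the first and last sentences appear in only one. That uneven coverage is one reason the per-sentence scores are averaged afterward.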

Then, It Scores Each Sentence for AI Likelihood

Every segment gets a score between 0 and 1:

A score closer to 0 means the text is likely human-written.

A score closer to 1 suggests it’s more likely AI-generated.

Since each sentence appears in multiple windows, Turnitin calculates a weighted average score for each sentence. This helps smooth out any accidental misreads and gives a more stable judgment.
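The averaging step might look something like this. It is a sketch under assumptions: the article does not say how the windows are weighted, so the weights default to equal, and window `k` is assumed to start at sentence `k` (a one-sentence stride). The name `sentence_scores` is hypothetical.

```python
from typing import List, Optional


def sentence_scores(
    num_sentences: int,
    window_size: int,
    window_scores: List[float],
    window_weights: Optional[List[float]] = None,  # assumption: equal weights
) -> List[float]:
    """Combine per-window scores into one weighted-average score per sentence."""
    if window_weights is None:
        window_weights = [1.0] * len(window_scores)

    totals = [0.0] * num_sentences
    weight_sums = [0.0] * num_sentences

    # Window k covers sentences k .. k + window_size - 1.
    for start, (score, weight) in enumerate(zip(window_scores, window_weights)):
        for idx in range(start, min(start + window_size, num_sentences)):
            totals[idx] += score * weight
            weight_sums[idx] += weight

    return [t / w if w else 0.0 for t, w in zip(totals, weight_sums)]


# Four sentences, windows of two sentences, three window scores.
print(sentence_scores(4, 2, [0.2, 0.8, 0.4]))
```

In this toy run, the second sentence sits in the first two windows, so its score lands halfway between 0.2 and 0.8. One high-scoring window alone is not enough to push it close to 1.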

As noted earlier, sentences judged to be AI-generated are also checked for AI paraphrasing, and that check produces a separate score.

Next, the System Makes a Document-Level Judgment

So how does it decide if an entire document is AI-generated?

According to Turnitin, a document is flagged only if 20% or more of its sentences are scored above the AI-writing threshold. That 20% rule isn’t random — it's based on testing that showed smaller amounts often lead to false positives. This way, Turnitin aims to be cautious and only flag work when there’s a stronger signal of AI involvement.

In other words, a paper needs to have a significant amount of AI-like content before it’s labeled as such.
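As a sketch, the document-level rule boils down to a simple share check. The 20% figure comes from the article, but the per-sentence cut-off of `0.5` is purely an assumed placeholder, since the real threshold isn’t given here. The function name `document_flagged` is also hypothetical.

```python
from typing import Sequence


def document_flagged(
    scores: Sequence[float],
    sentence_threshold: float = 0.5,  # assumption: real cut-off is not stated
    min_share: float = 0.20,          # from the article: the 20% rule
) -> bool:
    """Flag a document only if enough of its sentences look AI-written."""
    if not scores:
        return False
    above = sum(1 for s in scores if s > sentence_threshold)
    return above / len(scores) >= min_share


print(document_flagged([0.9, 0.1, 0.1, 0.1, 0.1]))  # 1 of 5 sentences is above the cut-off
print(document_flagged([0.9] + [0.1] * 9))          # 1 of 10 sentences is above the cut-off
```

The first document sits exactly at 20% and is flagged; the second is at 10% and is not, even though both contain one sentence with an identical high score.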

Short Papers Don’t Get Checked

Another important limit: Turnitin won’t run the AI detector on documents shorter than 300 words. That’s because short texts don’t give the system enough data to make an accurate prediction. It needs a bit of content to work with — the more words, the better the analysis.

That's how Turnitin detects AI content.

How Turnitin’s AI Detector Stacks Up Against Other Tools

There are plenty of AI detectors out there—some free, some paid—but Turnitin’s system stands apart in a few key ways:

Purpose-built for Academia: Unlike many general-purpose detectors, Turnitin’s models are specifically trained on real student work across subjects, languages, and writing styles. This reduces false positives and makes it more reliable in educational settings.

Dual-Model Approach: While most detectors just flag AI-generated text, Turnitin uses two models—AIW-2 for raw AI writing and AIR-1 for AI-paraphrased text—covering more ground and catching cleverly disguised content.

Transformer-Based Architecture: Many detectors rely on simpler stats like perplexity or burstiness. Turnitin’s use of advanced transformer models lets it spot subtle patterns in language, making detection smarter and more accurate.

Scale and Integration: Turnitin’s tools are embedded in learning management systems worldwide, already analyzing over 250 million papers—meaning their models continually improve with real-world data.

Transparency and Testing: Turnitin publishes detailed whitepapers and validation studies, showing their system’s performance and limitations openly—something most free detectors don’t do.

In short: Turnitin isn’t just another AI checker. It’s a robust, research-backed system designed to meet the complex demands of education, rather than just flagging AI use based on simple rules.

Turnitin vs. Other AI Detectors

Wondering if you can just use other AI detectors instead of Turnitin to check your work before submitting? Here’s the thing: Turnitin’s system isn’t easily replaceable by popular tools like GPTZero.

Turnitin trains its AI models on real student papers across a wide range of subjects and languages, so it’s finely tuned for academic writing. Plus, it’s learned from analyzing over 250 million actual submissions—something most other detectors simply don’t have. This real-world data really boosts accuracy.

Turnitin also goes a step further by using two models: one to spot AI-generated writing and another to catch AI-paraphrased sentences. While GPTZero and QuillBot offer some sentence-level highlights, they don’t match the depth and reliability Turnitin provides.

Technically, many detectors rely on simpler statistics like perplexity, while Turnitin is built on advanced transformer models that pick up on subtle language patterns, making its detection smarter.

FAQ

Q: What AI models does Turnitin use?

A: Turnitin uses two main models—AIW (AI Writing) to detect direct AI-generated text, and AIR (AI Rewriting) to spot AI-paraphrased content. The latest versions are AIW-2 and AIR-1, both powered by advanced Transformer-based deep learning.

Q: How can I avoid being flagged by Turnitin’s AI detectors?

A: If you’re concerned about AI detection, the best approach is to write original, well-researched content in your own authentic voice. There are also humanizer tools that aim to improve your writing without adding an AI-generated tone.

Q: Is Turnitin more accurate than free tools like ZeroGPT?

A: Yes. Turnitin’s models are peer-reviewed, tested on millions of real academic papers, and specifically tuned for student writing. In contrast, many free detectors don’t share their training data or false positive rates, and often miss sentence-level details. Tools like ZeroGPT tend to be more lenient and less precise.

Q: Can Turnitin detect writing from newer AI like GPT-4 or Gemini?

A: Absolutely. As of 2024, Turnitin’s system is designed to identify text generated by GPT-3, GPT-4, GPT-4o, Gemini, LLaMA, and other leading large language models.

Q: How accurate is Turnitin’s AI detection?

A: According to Turnitin, their AI detection is quite accurate. The document-level false positive rate stays below 1% when at least 20% of a document is flagged as AI-generated.

Conclusion

We’ve covered the nitty-gritty of Turnitin’s AI checker, from how it breaks papers down into overlapping segments to how it’s trained on real student writing and AI-generated content. As AI evolves, so does Turnitin, and both educators and students have to keep up with that change. At the end of the day, it’s not about catching people; it’s about maintaining trust in the work we turn in. Understanding how the tool works helps everyone use it more fairly and responsibly.